LinearB Engineering Metrics Benchmarks

The LinearB Engineering Metrics Benchmarks were created from a study of 3,694,690 pull requests from 2,022 dev organizations, spanning 103,807 active contributors.

Introduction to Community Benchmarks

For the first time since DORA’s research in 2014, engineering teams can compare their performance against industry-standard, data-backed benchmarks. Continue reading to learn more about our data collection and metric calculations.

Understanding Benchmark Categories

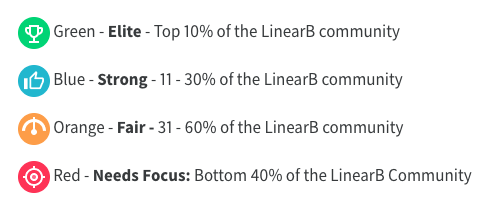

LinearB categorizes engineering performance into four distinct tiers:

| Category | Performance Level |
| --- | --- |
| Elite | Best-in-class teams with highly optimized workflows. |
| Strong | High-performing teams with room for incremental improvement. |
| Fair | Average performance, with key areas for enhancement. |
| Needs Improvement | Teams that can significantly optimize their processes. |

| Metric | Elite | Strong | Fair | Needs Improvement |
| --- | --- | --- | --- | --- |
| Cycle Time (hours) | Average: < 73; p75: < 19 | Average: 73-155; p75: 19-66 | Average: 155-304; p75: 66-218 | Average: 304+; p75: 218+ |
| Coding Time (hours) | Average: < 19; p75: < 0.5 | Average: 19-44; p75: 0.5-2.5 | Average: 44-99; p75: 2.5-24 | Average: 99+; p75: 24+ |
| Pickup Time (hours) | Average: < 7; p75: < 1 | Average: 7-13; p75: 1-3 | Average: 12-20; p75: 3-14 | Average: 20+; p75: 14+ |
| Review Time (hours) | Average: < 5; p75: < 0.5 | Average: 5-14; p75: 0.5-3 | Average: 14-29; p75: 3-18 | Average: 29+; p75: 18+ |
| Deploy Time (hours) | Average: < 6; p75: < 3 | Average: 6-50; p75: 3-69 | Average: 50-137; p75: 69-197 | Average: 137+; p75: 197+ |
| PR Size (number of code changes, in lines) | Average: < 219; p75: < 98 | Average: 219-395; p75: 98-148 | Average: 395-793; p75: 148-218 | Average: 793+; p75: 218+ |
| Rework Rate | Average: < 2% | Average: 2-5% | Average: 5-7% | Average: 7%+ |
| MTTR | Average: < 7% | Average: 7-9% | Average: 9-10% | Average: 10+ |
| CFR | Average: < 1% | Average: 1-8% | Average: 8-39% | Average: 39%+ |
| Refactor | Average: < 9% | Average: 9-15% | Average: 15-21% | Average: 21%+ |
| Merge Frequency (merges per developer, per week) | > 2 | 2-1.5 | 1.5-1 | < 1 |
| Deploy Frequency (deploys per developer, per week) | > 0.2 | 0.2-0.09 | 0.09-0.03 | < 0.03 |
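To make the tiers concrete, here is a minimal Python sketch that maps a measured value to its benchmark category. The thresholds are copied from the 75th-percentile and Merge Frequency figures in the table above. The function names, the dictionary keys, and the treatment of values that sit exactly on a boundary (assigned to the slower tier) are assumptions made for illustration, not LinearB's implementation.

```python
from bisect import bisect_right

TIERS = ["Elite", "Strong", "Fair", "Needs Improvement"]

# Upper bounds of the Elite, Strong, and Fair tiers at the 75th percentile,
# taken from the benchmark table (hours, except pr_size, which is in lines).
# The dictionary keys are hypothetical names chosen for this example.
P75_THRESHOLDS = {
    "cycle_time": [19, 66, 218],
    "coding_time": [0.5, 2.5, 24],
    "pickup_time": [1, 3, 14],
    "review_time": [0.5, 3, 18],
    "deploy_time": [3, 69, 197],
    "pr_size": [98, 148, 218],
}


def classify_p75(metric, value):
    """Return the tier for a lower-is-better metric measured at p75.

    Assumption: a value exactly on a boundary falls into the slower tier.
    """
    return TIERS[bisect_right(P75_THRESHOLDS[metric], value)]


def classify_merge_frequency(merges_per_dev_per_week):
    """Return the tier for Merge Frequency, where higher is better."""
    if merges_per_dev_per_week > 2:
        return "Elite"
    if merges_per_dev_per_week >= 1.5:
        return "Strong"
    if merges_per_dev_per_week >= 1:
        return "Fair"
    return "Needs Improvement"


print(classify_p75("cycle_time", 12))
print(classify_p75("pr_size", 300))
print(classify_merge_frequency(1.7))
```

With these inputs, a p75 cycle time of 12 hours lands in Elite, a p75 PR size of 300 lines lands in Needs Improvement, and 1.7 merges per developer per week lands in Strong.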

Understanding Percentiles in Benchmarking

LinearB recommends using the 75th percentile (p75) for cycle time analysis as it provides a balanced representation of your organization’s performance without being skewed by extreme outliers.

How Percentiles Are Calculated

Average Calculation:

- (Sum of branch times or PR sizes) / (Total number of branch times or PR sizes)

75th Percentile Calculation (p75):

- p75 is the value below which 75% of measurements fall; the remaining 25% are higher.

90th Percentile Calculation (p90):

- p90 is the value below which 90% of measurements fall; the remaining 10% are higher.

- Useful for identifying the slowest-performing processes in an organization.
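The three calculations above can be sketched in a few lines of Python. The sample cycle times are made up for illustration, and the `percentile` helper uses linear interpolation between neighboring values, which is an assumption: this article does not state which interpolation method LinearB applies.

```python
from statistics import mean


def percentile(values, p):
    """Return the p-th percentile (0-100) using linear interpolation.

    Assumption: interpolation between the two nearest ranks; other
    percentile definitions can give slightly different results.
    """
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = (len(ordered) - 1) * p / 100
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (rank - lower)


# Hypothetical cycle times in hours; the last value is an extreme outlier.
cycle_times_hours = [4, 6, 9, 12, 15, 20, 30, 48, 400]

average = mean(cycle_times_hours)
p75 = percentile(cycle_times_hours, 75)
p90 = percentile(cycle_times_hours, 90)

print(f"average: {average:.1f} h")
print(f"p75: {p75:.1f} h")
print(f"p90: {p90:.1f} h")
```

For this sample the average is about 60.4 hours, even though eight of the nine values are under 50 hours, because the single 400-hour outlier pulls it up. The p75 is 30.0 hours and is unaffected by the outlier, while the p90 of 118.4 hours shows how slow the slowest work is.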

Customizing Benchmark Settings

Configure your LinearB instance to report metrics using:

- Average

- 75th percentile (p75)

- 90th percentile (p90)

To adjust these settings, go to your Account Settings and select your preferred reporting method. For more information, see the article Changing your metrics from average to median or percentile.

Viewing Benchmarks in LinearB

LinearB provides benchmark indicators to help teams assess their performance against industry standards. These benchmarks are displayed in team dashboards and metrics reports, offering insights into overall cycle time and other key engineering metrics.

Dashboard View

- When benchmarks are enabled, a benchmark icon appears next to the cycle-time metric on the team dashboard.

- This allows teams to quickly compare their performance against LinearB’s benchmark standards.

Metrics Report View

- The Metrics Report provides a detailed breakdown of how a team performs against each benchmarked metric.

- Multiple teams can be combined in a metrics dashboard to compare average performance across teams.

LinearB benchmarks are available for the following key engineering metrics:

- Cycle Time

- Coding Time

- Pickup Time

- Review Time

- Deploy Time

- Deploy Frequency

- Merge Frequency

- PR Size

- Rework Rate

- MTTR

- CFR

- Refactor

Enabling or Disabling Benchmarks

Benchmarks may not be relevant or constructive for every team. To disable the benchmark icons for a specific team:

- Navigate to Team Settings > General.

- Toggle “Engineering Metrics Benchmarks” off.

- Click Save to apply changes.

By leveraging benchmarks, teams can identify areas for improvement, optimize workflows, and align performance with industry best practices.
