AI Iteration Summary for Teams


The AI Team Iteration Summary in Iterations provides a concise, AI-generated overview of your team’s performance across a completed iteration. It highlights key accomplishments, delivery insights, and missed goals by combining Git activity with project management data. This helps teams quickly review outcomes, identify areas for improvement, and share results to support continuous improvement.

Summary

- Review a completed iteration in Retro View from Teams → Iterations → Completed.

- Use Delivery Breakdown to see planned vs. added work and completion status.

- Open View AI Iteration Summary to get an AI-written overview, key accomplishments, disruptions, and recommendations.

- Share the summary via a deep link (permission-based) or Markdown.

Prerequisites

Before using the AI Team Iteration Summary, ensure the following:

- The team is connected to a Jira or Azure DevOps (ADO) project board, so Iterations can map Git activity to PM issues.

- The team board is configured as a Scrum board to define iteration boundaries and completion states.

- The Team Iteration Summary feature is enabled.

- You have the required permissions to view team data in Iterations.

Accessing the AI Team Iteration Summary

To access the AI Team Iteration Summary:

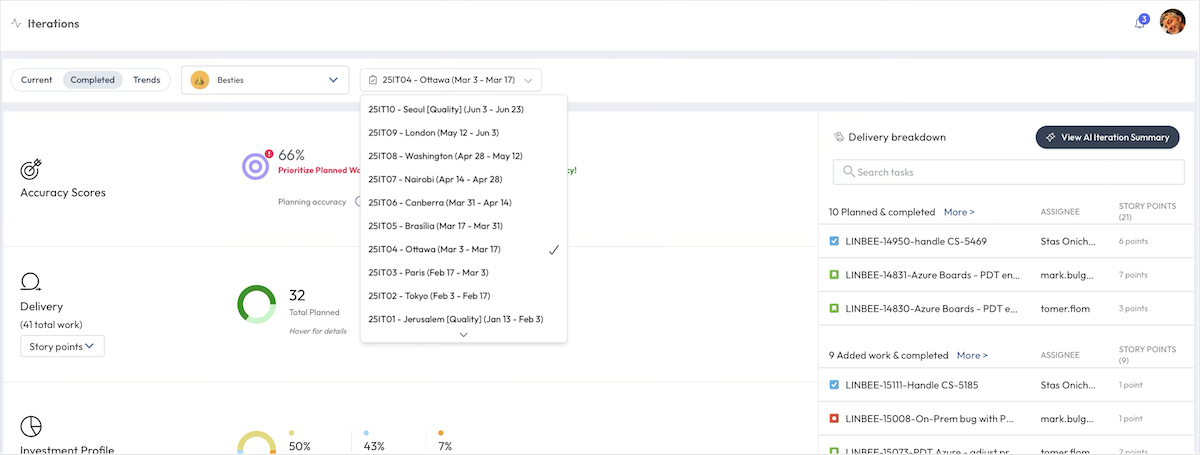

- Go to the Teams tab, then select Iterations → Completed.

- Select a past iteration from the iteration dropdown (for example, “25IT05 – Brasília”).

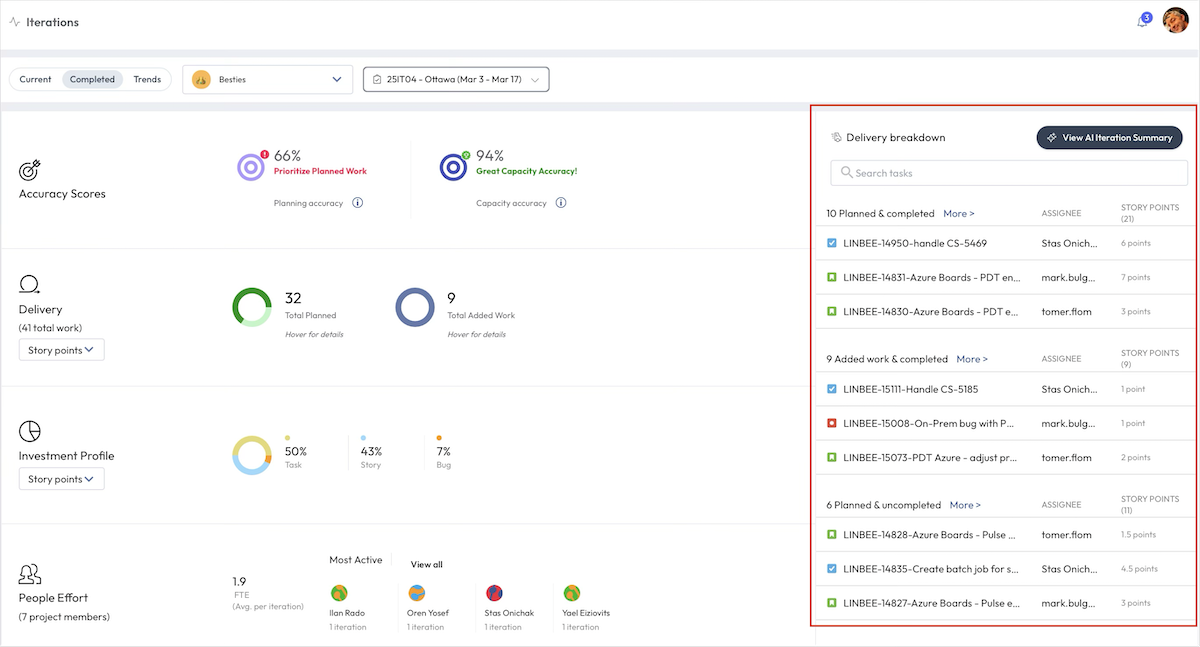

- The view switches to Retro View and shows the Delivery Breakdown panel by default.

Delivery Breakdown View

The Delivery Breakdown view is the default panel in Retro View for a completed iteration. It provides a structured breakdown of work that was planned, added, completed, and not completed, linked to both Git activity and PM issues.

This view helps teams assess whether they delivered what they committed to, identify unexpected work, and evaluate delivery consistency across contributors.

The Delivery Breakdown view includes:

- Issue groupings

  - Planned & Completed – Scoped at the start and delivered within the iteration.

  - Planned & Uncompleted – Scoped at the start but not completed within the iteration.

  - Added Work & Completed – Added after the iteration began and completed during the iteration.

  - Added Work & Uncompleted – Added after the iteration began but not completed.

- Assignee – The team member responsible for the issue.

- Story Points – The effort value from your PM system.

  - Tickets without story points (value = None) show as 1 story point by default in LinearB.

- Issue ID & Title – Click to open the issue or PR in its source system (for example, Jira or GitHub).

- PM system icons – Indicate whether the item originates from Jira or Azure DevOps (ADO).

- Delivery tags – Issue type (Bug, Story, Task, etc.) from PM metadata.

- Filtering options – Filter by issue type (Story, Task, Bug) to focus the list.

- Planned work – Issues or story points defined at the start of the sprint.

- Unplanned (Added) work – Items introduced more than 24 hours after the sprint began.
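To make the grouping rules concrete, here is a minimal Python sketch, not LinearB's implementation. It applies the two rules stated above: work introduced more than 24 hours after the iteration starts counts as added work, and tickets with no story points count as 1 SP. The `Issue` record, its `added_to_iteration_at` field, and the helper functions are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# From this article: work introduced more than 24 hours after the
# iteration starts counts as added (unplanned) work.
ADDED_WORK_CUTOFF = timedelta(hours=24)
# From this article: tickets with no story points count as 1 SP.
DEFAULT_STORY_POINTS = 1.0


@dataclass
class Issue:
    # Hypothetical record; real Jira/ADO payloads look different.
    key: str
    added_to_iteration_at: datetime
    completed: bool
    story_points: Optional[float] = None


def effective_points(issue: Issue) -> float:
    """Story points, falling back to the 1 SP default when the value is None."""
    if issue.story_points is None:
        return DEFAULT_STORY_POINTS
    return issue.story_points


def delivery_group(issue: Issue, iteration_start: datetime) -> str:
    """Place an issue into one of the four Delivery Breakdown groupings."""
    planned = issue.added_to_iteration_at <= iteration_start + ADDED_WORK_CUTOFF
    scope = "Planned" if planned else "Added Work"
    status = "Completed" if issue.completed else "Uncompleted"
    return f"{scope} & {status}"


start = datetime(2025, 3, 3, 9, 0)
issues = [
    Issue("PROJ-1", start, completed=True, story_points=3.0),
    Issue("PROJ-2", start + timedelta(hours=2), completed=False),
    Issue("PROJ-3", start + timedelta(days=3), completed=True, story_points=2.0),
]
for issue in issues:
    print(issue.key, delivery_group(issue, start), effective_points(issue))
# PROJ-1 Planned & Completed 3.0
# PROJ-2 Planned & Uncompleted 1.0
# PROJ-3 Added Work & Completed 2.0
```

The sketch treats an issue added exactly 24 hours after the start as planned, because only work introduced *more than* 24 hours after the start counts as added.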

AI Iteration Summary View

The AI Iteration Summary provides a structured, plain-language overview of your team’s sprint performance. It consolidates key delivery insights into a single panel that supports retrospectives, highlights accomplishments, and calls out workflow patterns affecting predictability and throughput.

The AI Iteration Summary is generated after an iteration is completed. It can take up to 24 hours after the iteration ends for the summary to become available. It is often available sooner, but generation is not immediate.
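If you plan a reminder or a scripted check for the summary, the 24-hour window can be computed from the iteration end time. This Python sketch uses hypothetical helper names and is not a LinearB API; it only encodes the stated upper bound, so the summary may well appear earlier.

```python
from datetime import datetime, timedelta, timezone

# From this article: generation can take up to 24 hours after the
# iteration ends (it is often faster).
MAX_GENERATION_DELAY = timedelta(hours=24)


def latest_expected_availability(iteration_end: datetime) -> datetime:
    """Upper bound on when the AI Iteration Summary should be available."""
    return iteration_end + MAX_GENERATION_DELAY


def should_be_available(iteration_end: datetime, now: datetime) -> bool:
    """True once the full 24-hour window since the iteration ended has passed."""
    return now >= latest_expected_availability(iteration_end)


end = datetime(2025, 3, 14, 17, 0, tzinfo=timezone.utc)
print(latest_expected_availability(end).isoformat())
# 2025-03-15T17:00:00+00:00
```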

Accessing the AI Iteration Summary View

Click View AI Iteration Summary to open the summary panel for the selected completed iteration.

Viewing the AI Iteration Summary

The summary is divided into structured segments that provide both high-level and detailed insights:

Iteration name and dates

Shows the selected iteration name and its start and end dates.

Overview

A short narrative summary of sprint themes, including:

- Planned vs. unplanned work balance

- Capacity accuracy vs. planning accuracy

- Notable accomplishments or challenges impacting delivery

Example:

The team completed significant PM Connectors and Vision-related work with a Planning Accuracy of 33% and Capacity Accuracy of 64%. The combination of planned feature work and unplanned production fixes resulted in lower Planning Accuracy while maintaining reasonable capacity utilization.

Key accomplishments

Highlights up to 10 major deliverables, such as:

- Delivered features (with Story Point values)

- Unplanned improvements completed mid-iteration

- Infrastructure upgrades that support team velocity

Example:

- Delivered GitHubIssuesConnector functionality (1 SP)

- Implemented Azure PDT triggers (3 SP)

- Vision iteration summary monitoring (0.5 SP)

- Throttling Vision triggers (1 SP)

- TWA code analysis fixes (2 SP)

Planning disruptions

Calls out factors impacting predictability and scope, including:

- Unplanned work – items added mid-sprint and completed

- Untracked branches – code pushed to branches without linked issues

- Unexpected blockers – coordination issues or delays affecting flow

Example:

- 10 items of unplanned work, including:

  - GitHub Issues Client data collection (LINBEE-13097)

  - TWA code change fixes (LINBEE-15316)

  - Shortcut org failures in Databricks (LINBEE-15319)

- 10 untracked branches from 5 contributors
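Untracked branches are branches with no linked PM issue. As a rough illustration, the Python sketch below assumes a branch is linked when its name contains a Jira-style issue key such as `LINBEE-13097`. LinearB's actual linking rules are not described in this article and may differ; the pattern and function names are hypothetical.

```python
import re
from collections import defaultdict

# Assumption: a branch is "tracked" if its name contains an uppercase
# Jira-style key (PROJECT-123). Real linking rules may differ.
ISSUE_KEY = re.compile(r"[A-Z][A-Z0-9]+-\d+")


def is_untracked(branch_name: str) -> bool:
    """A branch counts as untracked if its name contains no issue key."""
    return ISSUE_KEY.search(branch_name) is None


def untracked_by_contributor(branches: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group untracked branch names by the contributor who pushed them."""
    result: dict[str, list[str]] = defaultdict(list)
    for author, branch in branches:
        if is_untracked(branch):
            result[author].append(branch)
    return dict(result)


pushed = [
    ("dana", "feature/LINBEE-15316-twa-fixes"),
    ("dana", "hotfix/databricks-retry"),
    ("lee", "experiment/cache-tuning"),
]
untracked = untracked_by_contributor(pushed)
print(sum(len(b) for b in untracked.values()), "untracked branches from",
      len(untracked), "contributors")
# 2 untracked branches from 2 contributors
```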

PR flow analysis

Highlights review and merge patterns that may affect quality and predictability, such as:

- Pull requests with minimal review

- Delays in merging

- Large PRs merged without adequate vetting

Example:

- PR pm-connectors/590: 460 lines merged in 83 minutes with only 2 minutes of review

- PR linta/1421: 138 lines, merged after 67 hours with a 1-minute review
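These patterns can be approximated with simple thresholds. The Python sketch below is illustrative only: the threshold values (`MIN_REVIEW_MINUTES`, `LARGE_PR_LINES`, `SLOW_MERGE_HOURS`) and the `PullRequest` fields are assumptions, not LinearB's rules, and merge time is assumed to be measured from when the PR was opened. It replays the two example PRs listed above.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; this article does not
# document the rules used to flag PRs.
MIN_REVIEW_MINUTES = 5
LARGE_PR_LINES = 400
SLOW_MERGE_HOURS = 48


@dataclass
class PullRequest:
    pr_id: str
    lines_changed: int
    review_minutes: float
    minutes_to_merge: float  # assumed: measured from PR open to merge


def pr_flow_flags(pr: PullRequest) -> list[str]:
    """Return the PR flow patterns this PR exhibits under the assumed thresholds."""
    flags = []
    if pr.review_minutes < MIN_REVIEW_MINUTES:
        flags.append("minimal review")
        if pr.lines_changed >= LARGE_PR_LINES:
            flags.append("large PR merged without adequate vetting")
    if pr.minutes_to_merge > SLOW_MERGE_HOURS * 60:
        flags.append("delayed merge")
    return flags


# Figures taken from the examples in this section.
for pr in [
    PullRequest("pm-connectors/590", 460, review_minutes=2, minutes_to_merge=83),
    PullRequest("linta/1421", 138, review_minutes=1, minutes_to_merge=67 * 60),
]:
    print(pr.pr_id, pr_flow_flags(pr))
# pm-connectors/590 ['minimal review', 'large PR merged without adequate vetting']
# linta/1421 ['minimal review', 'delayed merge']
```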

AI-generated recommendations

Actionable guidance to improve planning and execution in future sprints:

- Reduce planned scope to improve predictability

- Automate enforcement so all PRs are linked to tracked issues

- Use summary signals (planning accuracy, untracked work) to focus retro discussions

Example:

- Reduce planned scope by 20% (approx. 4 SP) to improve forecast accuracy.

- Automate PR tracking to flag unlinked branches and reduce shadow work.

Sharing the iteration summary

You can share the iteration summary in two formats:

- Deep link (permission-based) – Generates a shareable link for users with the required access.

- Markdown text – Copies the summary to your clipboard for quick sharing or documentation.

Providing feedback

At the bottom of the summary panel, you can submit feedback to help improve the AI-generated summaries:

- 👍 / 👎 Like or dislike – Your feedback is recorded along with the iteration context and used to improve the AI engine.

Related links


AI Insights – Overview

AI Rule Files