AI Code Review Metrics

Track how your team is using gitStream AI: see how many pull requests are reviewed, how often suggestions are accepted, and how many lines of code are improved through AI-assisted reviews.

LinearB’s AI Code Review surfaces potential risks across pull requests, including bugs, security vulnerabilities, performance concerns, and maintainability issues. Unlike traditional rule-based tools that only catch clear-cut problems, the AI takes a broader, more cautious approach.

This means:

- You may see more findings than expected, including some that turn out to be false positives.

- The AI is intentionally over-inclusive to help ensure nothing critical is missed.

- The goal is to provide insight, not to block or approve pull requests.

We designed it this way to help teams stay ahead of issues and make more informed decisions during review.

As the AI continues to learn from real usage and feedback, the number of false positives will decrease over time, improving precision and relevance in future reviews.

Configuring AI Review

Issues Limit

The Issues Limit setting controls how many issues are included in the generated AI Review comment on a pull request. Select a number to limit issues, or choose Unlimited to include all identified issues.

Example

If AI Review identifies 12 issues and the limit is set to 3, only 3 issues appear in the pull request comment.
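The effect of the limit can be sketched in a few lines of Python. This is an illustration only, not LinearB's implementation: the function name `apply_issues_limit` is hypothetical, `None` stands in for the Unlimited option, and the sketch assumes the first issues in the list are the ones kept, which the setting description does not specify.

```python
from typing import Optional


def apply_issues_limit(issues: list[str], limit: Optional[int]) -> list[str]:
    """Return the issues that would appear in the review comment.

    limit=None stands in for the Unlimited option (hypothetical encoding).
    Assumption: issues are kept in their existing order.
    """
    if limit is None:
        return list(issues)
    if limit < 0:
        raise ValueError("limit must be non-negative")
    return issues[:limit]


found = [f"issue-{n}" for n in range(1, 13)]  # 12 identified issues
shown = apply_issues_limit(found, 3)
print(len(shown))  # 3
print(len(apply_issues_limit(found, None)))  # 12
```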

Custom Review Guidelines

You can customize how AI Review comments on pull requests by adding guidelines. Guidelines influence what the AI comments on and what it ignores, helping you focus reviews on what matters to your team and reduce noise.

Example guidelines

- Do not comment on formatting issues

- Focus only on security and performance concerns

- Ignore changes in test files

Recommended workflow

To achieve the review behavior you want, use this iterative approach:

- Start with the Playground

- Add specific guidelines, for example, "Do not comment on formatting issues"

- Run the review in the Playground using a reference pull request from your repository

- Iterate until the output matches your expectations

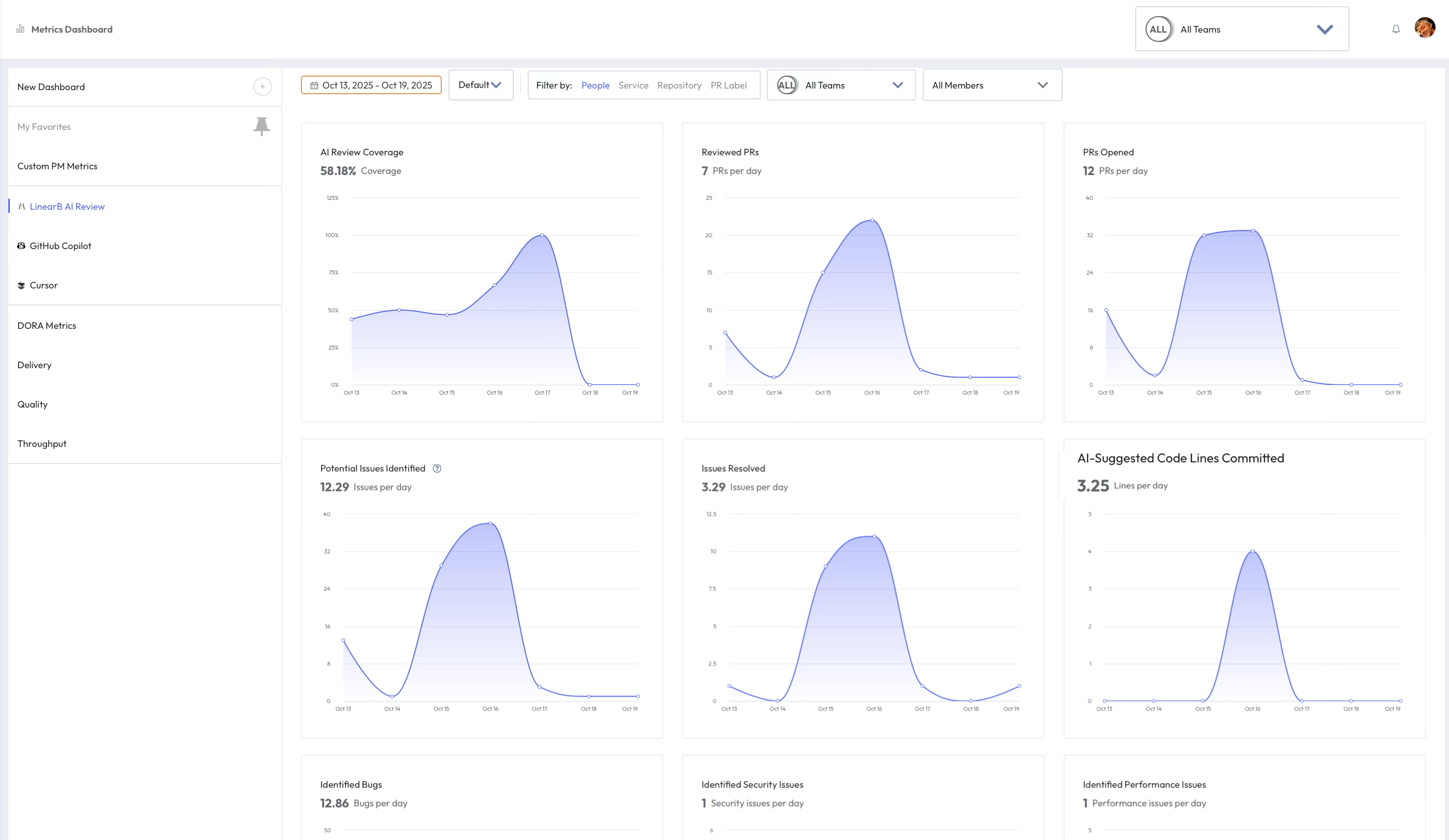

Working with trends and drill downs

LinearB’s AI Code Review engine surfaces insights that go beyond code completion. These findings help identify potential risks and improvement areas across all AI-reviewed pull requests.

You can click any data point on a trend line to open a detailed view of the related pull requests for that day or period. The drill-down view lists affected pull requests, their repositories, and issuers, helping you trace patterns, investigate findings, and take action directly from the data.

Each finding category highlights a different aspect of code quality, enabling engineering leaders and developers to track trends, uncover root causes, and take proactive steps to improve the overall health of the codebase.

You can also click the Share icon to generate a direct link to the filtered view for collaboration or documentation purposes.

Metrics definitions

AI Review Coverage

The percentage of pull requests opened in the selected timeframe that received at least one AI review. This shows how broadly AI code review is applied across new pull requests.

Reviewed PRs

The number of unique pull requests that received at least one gitStream AI review during the selected timeframe. Use this metric to track the breadth of AI review coverage over time. A higher number means more pull requests are benefiting from AI review, while a drop may point to configuration gaps or reduced pull request activity.

PRs Opened

The total number of pull requests created during the selected timeframe. This count includes all pull requests opened across repositories connected to LinearB.
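The relationship between PRs Opened, Reviewed PRs, and AI Review Coverage can be sketched as follows. This is a rough illustration, not how LinearB computes the metrics: the `PullRequest` record and all function names are hypothetical, and the sketch assumes that coverage counts a pull request opened in the timeframe as covered if it has any AI review at all.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class PullRequest:
    # Hypothetical record shape, for illustration only.
    number: int
    opened_on: date
    ai_review_dates: list[date] = field(default_factory=list)


def in_range(day: date, start: date, end: date) -> bool:
    return start <= day <= end


def prs_opened(prs, start, end) -> int:
    """Pull requests created during the timeframe."""
    return sum(1 for pr in prs if in_range(pr.opened_on, start, end))


def reviewed_prs(prs, start, end) -> int:
    """Unique pull requests with at least one AI review during the timeframe."""
    return sum(
        1 for pr in prs
        if any(in_range(d, start, end) for d in pr.ai_review_dates)
    )


def ai_review_coverage(prs, start, end) -> float:
    """Percentage of pull requests opened in the timeframe that have an AI review."""
    opened = [pr for pr in prs if in_range(pr.opened_on, start, end)]
    if not opened:
        return 0.0
    covered = sum(1 for pr in opened if pr.ai_review_dates)
    return 100.0 * covered / len(opened)


prs = [
    PullRequest(1, date(2024, 5, 28), [date(2024, 6, 2)]),  # opened earlier, reviewed in June
    PullRequest(2, date(2024, 6, 3), [date(2024, 6, 3)]),
    PullRequest(3, date(2024, 6, 10)),                      # never reviewed
    PullRequest(4, date(2024, 6, 12), [date(2024, 6, 13), date(2024, 6, 14)]),
]
start, end = date(2024, 6, 1), date(2024, 6, 30)

print(prs_opened(prs, start, end))                    # 3
print(reviewed_prs(prs, start, end))                  # 3
print(round(ai_review_coverage(prs, start, end), 1))  # 66.7
```

In this sample, pull request 1 counts toward Reviewed PRs but not toward coverage, because it was opened before the timeframe began. That is why the two numbers can diverge.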

Potential Issues Identified

The number of potential issues identified by gitStream AI during the selected timeframe. This reflects the total findings across all categories (bugs, readability, performance, and more). A higher count indicates more opportunities for improvement, while tracking trends over time helps assess overall code quality.
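As a minimal sketch of how this total relates to the per-category metrics, the snippet below sums findings across categories. The record shape and the sample data are made up for illustration; only the category names come from this article.

```python
from collections import Counter

# Hypothetical findings for one timeframe; each record names its category.
findings = [
    {"pr": 101, "category": "bugs"},
    {"pr": 101, "category": "readability"},
    {"pr": 102, "category": "security"},
    {"pr": 103, "category": "bugs"},
]


def count_by_category(records) -> Counter:
    """Count findings per category."""
    return Counter(record["category"] for record in records)


per_category = count_by_category(findings)
potential_issues_identified = sum(per_category.values())

print(per_category["bugs"])         # 2
print(potential_issues_identified)  # 4
```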

Issues Resolved

The number of AI-identified issues that were resolved during the selected timeframe. An issue is considered resolved if it was fixed using an AI review suggestion or by the developer independently. LinearB verifies whether an issue is resolved the next time the AI review process runs. This metric helps track adoption and follow-through on AI-flagged findings.
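One way to picture the check on the next review run is as a comparison of two sets of open issues. This is an assumption-laden sketch, not LinearB's actual mechanism: the issue identifiers and the function `resolved_between_runs` are hypothetical, and the article does not describe how issues are matched across runs.

```python
def resolved_between_runs(previous_run: set[str], current_run: set[str]) -> set[str]:
    """Issues flagged in the previous run that no longer appear in the current one.

    This does not distinguish between a fix made with an AI suggestion and a
    fix made independently by the developer; both count as resolved.
    """
    return previous_run - current_run


first_run = {"missing-null-check", "unvalidated-input", "unused-variable"}
second_run = {"unvalidated-input", "slow-loop"}  # one issue remains, one is new

resolved = resolved_between_runs(first_run, second_run)
print(sorted(resolved))  # ['missing-null-check', 'unused-variable']
```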

AI-Suggested Code Lines Committed

The number of code lines pushed using the Commit Suggestion option in the AI review to resolve AI-flagged findings.

This value shows how many lines of code were directly committed based on AI Review suggestions. A higher number typically means the AI supplied larger or more complex fixes, which suggests more developer time saved through automation.

Identified Bugs Issues

This metric highlights logic and correctness issues flagged by the AI Code Review engine, including improper control flow, missing validations, or faulty error handling. These are early indicators of defects that could impact runtime behavior or stability. Investigating frequent bugs may lead to improved review processes, better test coverage, or targeted developer training.

Identified Security Issues

This metric tracks potential security risks identified by the AI engine, such as improper input validation, authentication flaws, insecure communication, or access control issues. It helps security-conscious teams monitor adherence to secure coding practices. Recurring findings may suggest the need for stronger guidelines or security tooling.

Identified Performance Issues

This metric highlights code inefficiencies, such as algorithmic slowdowns, excessive memory usage, or inefficient network or data handling. Monitoring this helps teams catch potential performance regressions before they impact users. Frequent issues may point to opportunities for optimization or improved engineering standards.

Identified Readability Issues

This metric measures issues affecting code clarity, such as naming conventions, structural complexity, or inconsistent formatting. High counts may indicate reduced maintainability or onboarding friction. Findings can help teams improve style guides and promote clearer code practices.

Identified Maintainability Issues

This metric captures structural concerns that affect long-term code health, including tight coupling, duplication, poor modularity, or lack of abstraction. Addressing these early helps reduce technical debt and improve release agility. This data supports strategic refactoring and architectural improvements.

Identified Scope Gaps

This metric detects when the code in a pull request does not fully cover the requirements or acceptance criteria from its linked Jira ticket. It helps ensure that both business and technical requirements are fully implemented before release. Frequent findings may highlight gaps in grooming, unclear specifications, or incomplete development practices.
