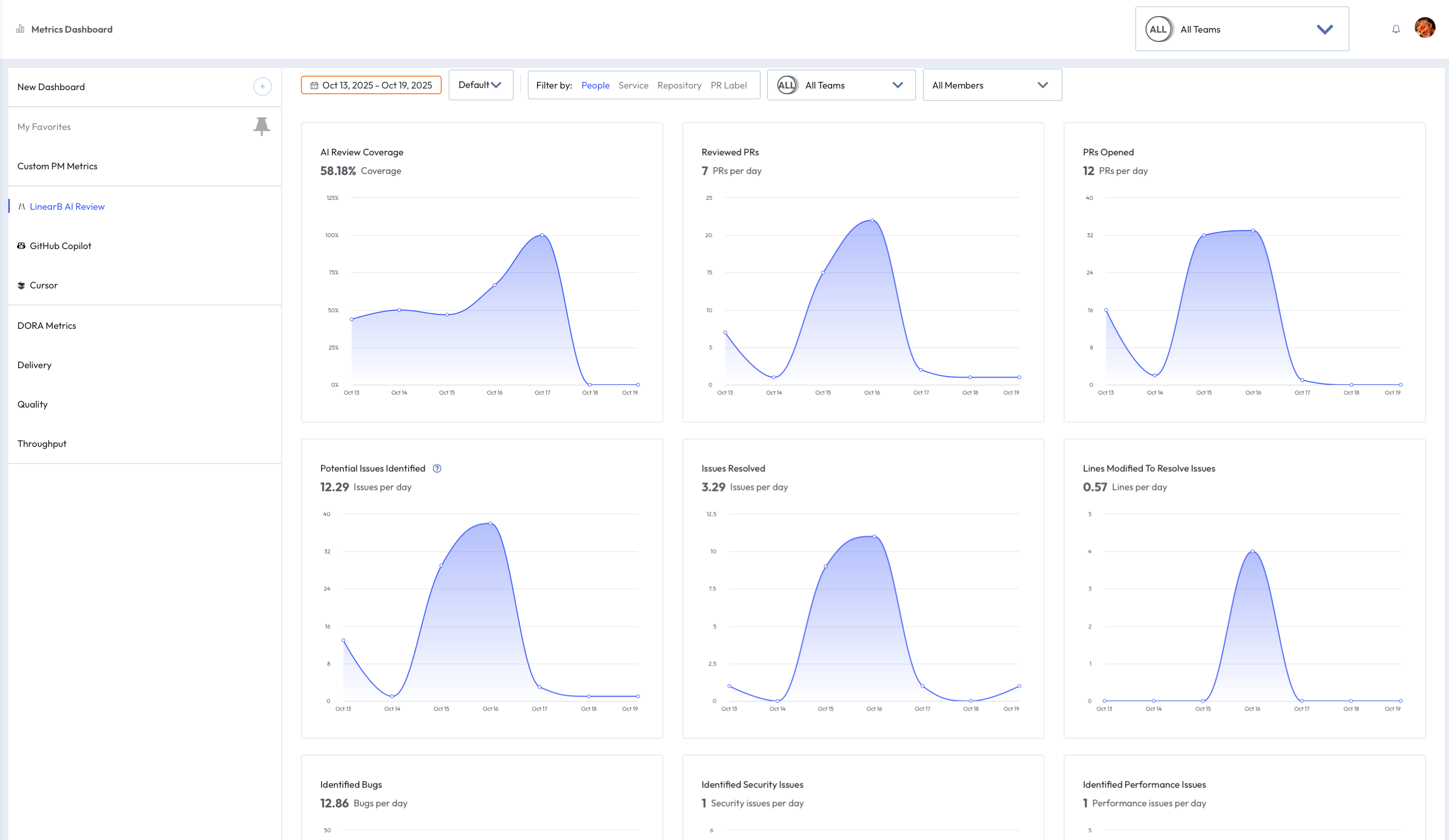

AI Code Review Findings

Gain visibility into how the AI Code Review engine identifies potential issues in your codebase—spanning bugs, security vulnerabilities, performance bottlenecks, and long-term maintainability risks.

LinearB’s AI Code Review surfaces potential risks across pull requests. Unlike traditional rule-based tools, which only catch clear-cut problems, the AI takes a broader, more cautious approach.

This means:

- You may see more findings than expected, including some that turn out to be false positives

- The AI is intentionally over-inclusive to ensure nothing critical is missed

- The goal is to provide insight, not to block or approve PRs

We designed it this way to help teams stay ahead of issues and make more informed decisions during review.

As the AI continues to learn from real usage and feedback, the number of false positives is expected to decrease significantly over time, improving both precision and relevance in future reviews.

The findings on this page help identify potential risks and improvement areas across all AI-reviewed pull requests.

You can click any data point on a trend line to open a detailed view of the related pull requests for that day or period. The drill-down view lists the affected PRs, their repositories, and their issuers, so you can trace patterns, investigate findings, and take action directly from the data.

Each finding category highlights a different aspect of code quality, enabling engineering leaders and developers to track trends, uncover root causes, and take proactive steps to improve the overall health of the codebase.

You can also click the Share icon to generate a direct link to the filtered view for collaboration or documentation purposes.

Reviewed PRs

The total number of pull requests reviewed by GitStream AI per day. Use this metric to understand the coverage of AI reviews over time. A high number indicates broad review coverage, while a drop may signal configuration gaps or reduced developer activity.

Potential Issues Identified

The number of potential issues flagged by GitStream AI per day. This reflects the total volume of findings across all categories (bugs, readability, performance, etc.). A high number suggests many areas for improvement, while trends over time can help you measure overall code health.

Issues Resolved

The number of AI-identified issues that were resolved per day. This metric helps you track how many of the AI-flagged issues developers are addressing, and it can be used as a signal for adoption and follow-through.
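To make the relationship between Potential Issues Identified and Issues Resolved concrete, here is a minimal sketch that computes a resolution rate over a period. It is not part of the product: the `DailyCounts` type, the `resolution_rate` function, and the sample numbers are all hypothetical and only illustrate how the two daily counts could be combined once you have read them off the charts.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class DailyCounts:
    """Hypothetical container for one day's values read off the two charts."""
    day: str
    identified: int  # Potential Issues Identified
    resolved: int    # Issues Resolved


def resolution_rate(rows: Sequence[DailyCounts]) -> Optional[float]:
    """Share of identified issues that were resolved over the period.

    Returns None when nothing was identified, to avoid dividing by zero.
    """
    total_identified = sum(r.identified for r in rows)
    total_resolved = sum(r.resolved for r in rows)
    if total_identified == 0:
        return None
    return total_resolved / total_identified


# Made-up sample values, for illustration only.
sample = [
    DailyCounts("day 1", identified=40, resolved=10),
    DailyCounts("day 2", identified=25, resolved=15),
    DailyCounts("day 3", identified=35, resolved=25),
]

rate = resolution_rate(sample)
print(f"Resolution rate: {rate:.0%}")  # prints "Resolution rate: 50%"
```

A rate that stays low over several weeks may point to the same adoption or follow-through gap that the Issues Resolved trend is meant to reveal.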

Lines Modified to Resolve Issues

The average number of lines modified per day in response to AI findings.

This can indicate the level of effort required to fix issues. A spike may reflect complex fixes or widespread problems, while low numbers may indicate either minor issues or a lack of follow-up.
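One simple way to decide what counts as a spike is to compare each day against the median for the period. The sketch below assumes you have copied the daily values from the chart into a dictionary. The `find_spikes` function, the `factor` threshold of 2.0, and the sample values are illustrative assumptions, not product behavior.

```python
from statistics import median
from typing import Dict, List


def find_spikes(daily_lines: Dict[str, float], factor: float = 2.0) -> List[str]:
    """Return the days whose value exceeds `factor` times the period median.

    `factor` is an arbitrary illustrative threshold; tune it to your own data.
    """
    if not daily_lines:
        return []
    baseline = median(daily_lines.values())
    if baseline <= 0:
        return []
    return [day for day, lines in daily_lines.items() if lines > factor * baseline]


# Made-up sample values, for illustration only.
lines_modified = {"Mon": 12, "Tue": 15, "Wed": 60, "Thu": 14, "Fri": 10}

print(find_spikes(lines_modified))  # prints ['Wed']
```

Days flagged this way are good candidates for the drill-down view, where you can check whether the spike came from one complex fix or from many small ones.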

Identified Bugs

This metric highlights logic and correctness issues flagged by the AI Code Review engine, including improper control flow, missing validations, or faulty error handling. These are early indicators of defects that could impact runtime behavior or stability. Investigating frequent bugs may lead to improved review processes, better test coverage, or targeted developer training.

Identified Security Issues

This metric tracks potential security risks identified by the AI engine—such as improper input validation, authentication flaws, insecure communication, or access control issues. It helps security-conscious teams monitor adherence to secure coding practices. Recurring findings may suggest the need for stronger guidelines or security tooling.

Identified Performance Issues

This metric highlights code inefficiencies, such as algorithmic slowdowns, excessive memory usage, or inefficient network/data handling. Monitoring this helps teams catch potential performance regressions before they impact users. Frequent issues may point to opportunities for optimization or improved engineering standards.

Identified Readability Issues

This metric measures issues affecting code clarity—such as naming conventions, structural complexity, or inconsistent formatting. High counts may indicate reduced maintainability or onboarding friction. Findings can help teams improve style guides and promote clearer code practices.

Identified Maintainability Issues

This metric captures structural concerns that affect long-term code health, including tight coupling, duplication, poor modularity, or lack of abstraction. Addressing these early helps reduce technical debt and improve release agility. This data supports strategic refactoring and architectural improvements.

